Generative Pre-trained Transformer, or GPT, plays a vital role in modern artificial intelligence systems. This neural network-based model excels in predicting and generating human-like text. By analyzing prompts, it creates context-aware responses that feel natural and relevant.

Built on the Transformer architecture introduced by Google researchers in 2017, GPT uses self-attention to process all the tokens in a passage in parallel rather than one at a time. This allows it to capture complex language patterns efficiently. Real-world applications such as ChatGPT and GitHub Copilot showcase its versatility.

From GPT-1 to GPT-4, these models have evolved significantly. Parameter counts grew from 117 million in GPT-1 to 175 billion in GPT-3, and OpenAI has not disclosed GPT-4's size. Today, GPT powers capabilities like code generation, creative writing, and open-ended conversation, making it a cornerstone of natural language processing and machine learning.

Understanding GPT Technology

Transformers have revolutionized the way machines understand and produce natural language. These neural networks form the backbone of advanced AI systems, enabling them to process vast amounts of data efficiently. The term GPT stands for Generative Pre-trained Transformer, which names its core components: Generative (it creates content), Pre-trained (on massive datasets), and Transformer (its architecture).

Definition and Overview

GPT models are designed to predict and generate human-like text based on input prompts. They use a decoder-only variant of the Transformer architecture, introduced by Google in 2017. Its self-attention mechanism lets each token draw on the tokens before it, and during training it computes all positions in parallel. Text is then generated one token at a time, with each prediction conditioned on the prompt and on the tokens produced so far.

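Earlier approaches, such as recurrent neural networks (RNNs) and convolutional neural networks (CNNs), pass information along step by step. Attention instead connects any two positions directly, so Transformers handle long-range dependencies in text better. This makes them well suited to tasks like translation, summarization, and conversation. The combination of deep learning and natural language processing has made GPT models a cornerstone of modern AI.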

Historical Context and Evolution

The development of GPT models began with GPT-1 in 2018, built on the Transformer architecture. GPT-2, released in 2019, had 1.5 billion parameters, and its weights were eventually made public, showcasing its potential for generating high-quality text. GPT-3, launched in 2020, scaled up to 175 billion parameters, enabling capabilities like code generation and creative writing.

GPT-4, released in 2023, represents a significant leap forward. It is multimodal, accepting both text and image inputs. OpenAI reports that it scored around the top 10% of test takers on a simulated bar exam. The evolution of these models demonstrates the growing sophistication of large language models and their ability to handle complex tasks.

| Model | Year | Parameters | Key Milestones |
|---|---|---|---|
| GPT-1 | 2018 | 117M | First generative pre-trained Transformer |
| GPT-2 | 2019 | 1.5B | Weights released publicly, high-quality text generation |
| GPT-3 | 2020 | 175B | Code generation, creative writing |
| GPT-4 | 2023 | Not disclosed | Multimodal input, ~top 10% on a simulated bar exam |

Larger parameter counts, combined with more training data and compute, have generally coincided with stronger performance on complex tasks, although size alone does not determine capability. This evolution highlights the rapid pace of machine learning research and the potential for future innovations in AI.

How GPT Technology Works

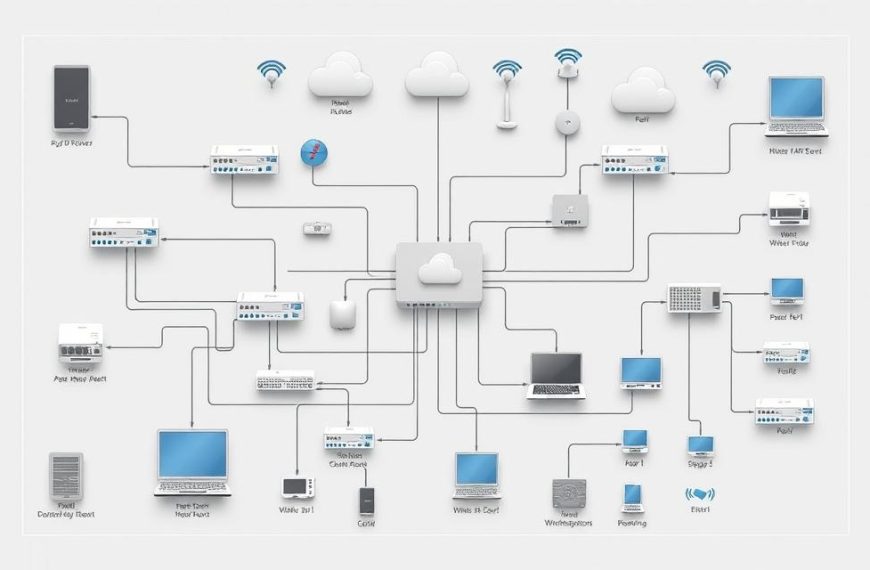

The inner workings of these systems are only loosely inspired by human cognition. They combine neural networks, large training datasets, and optimization algorithms to generate text that feels natural and context-aware.

Neural Networks and Pre-training

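At the heart of these systems are neural networks, loosely inspired by the way neurons in the brain process information. During pre-training, the model is exposed to vast amounts of text, much of it from the web, and learns to predict the next token. The training signal comes from the text itself rather than from human labels, so this phase is called self-supervised learning. It teaches the system the statistical patterns of language.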

Tokenization breaks input text into smaller units called tokens, such as words or subwords. GPT-2 and GPT-3, for example, share a byte-level Byte-Pair Encoding vocabulary of 50,257 tokens. Working with subwords lets the model handle diverse sentence structures, rare words, and misspellings.

Transformers and Attention Mechanisms

The transformer architecture, introduced in 2017, revolutionized AI by enabling parallel processing of entire sentences. Unlike older models like RNNs, which process text sequentially, transformers use self-attention mechanisms to weigh the importance of different words in a sentence.

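For instance, in the input “dog chases cat,” self-attention lets the representation of “cat” draw on “dog” and “chases,” so the model can tell who is chasing whom. In GPT, attention is causal: each token can attend only to itself and earlier tokens. This is what lets the model generate text from left to right.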
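To make the idea concrete, here is a minimal sketch of scaled dot-product self-attention with a causal mask, written in plain Python. It uses made-up 4-dimensional vectors for the three tokens and, for simplicity, feeds them in directly as queries, keys, and values. A real GPT layer first multiplies the inputs by learned projection matrices and runs many attention heads in parallel.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(q, k, v):
    """Scaled dot-product attention where token i sees only tokens 0..i.

    q, k, v are lists of equal-length vectors, one per token.
    Returns (outputs, weights); weights[i][j] is how strongly token i
    attends to token j (always 0 for j > i because of the causal mask).
    """
    d = len(q[0])
    n = len(q)
    outputs, weights = [], []
    for i in range(n):
        # Dot product of token i's query with each visible key, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d)
                  for j in range(i + 1)]
        w = softmax(scores)
        # Output = attention-weighted average of the visible value vectors.
        out = [sum(w[j] * v[j][c] for j in range(i + 1)) for c in range(len(v[0]))]
        outputs.append(out)
        weights.append(w + [0.0] * (n - i - 1))  # masked (future) positions get 0
    return outputs, weights


# Usage with made-up 4-dimensional vectors for "dog chases cat".
tokens = ["dog", "chases", "cat"]
x = [[1.0, 0.0, 1.0, 0.0],
     [0.0, 1.0, 0.0, 1.0],
     [1.0, 0.0, 1.0, 1.0]]
out, w = causal_self_attention(x, x, x)  # identity projections: a simplification
for tok, row in zip(tokens, w):
    print(tok, [round(p, 2) for p in row])
```

Each row of `w` shows how much one token attends to the tokens at or before it. Because of the causal mask, “dog” can only attend to itself.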

Contextual Embeddings and Fine-tuning

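During pre-training, the model learns contextual embeddings. These are vector representations of each token that change with the surrounding words, so “bank” in “river bank” and in “bank loan” ends up with different representations. After pre-training, the model can be fine-tuned for specialized tasks such as medical Q&A or code generation. Fine-tuning continues training on a smaller, task-specific dataset and adjusts the model's parameters, making responses more precise and relevant for the target domain.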
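Fine-tuning starts with a dataset of example prompts and desired responses. The sketch below follows the chat-style JSON Lines layout that OpenAI's fine-tuning guide describes: one JSON object per line, each holding a `messages` list of system, user, and assistant turns. The example pairs and the `SYSTEM_PROMPT` text are hypothetical placeholders.

```python
import json

SYSTEM_PROMPT = "You are a concise Python coding assistant."  # hypothetical

# Hypothetical prompt/answer pairs; a real fine-tune needs many more from the target domain.
examples = [
    ("Write a Python function that reverses a string.",
     "def reverse(s):\n    return s[::-1]"),
    ("Write a Python function that squares a number.",
     "def square(x):\n    return x * x"),
]


def to_chat_record(prompt, answer):
    """Wrap one prompt/answer pair as a list of chat messages."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": prompt},
            {"role": "assistant", "content": answer},
        ]
    }


def to_jsonl(pairs):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(to_chat_record(p, a)) for p, a in pairs) + "\n"


# The printed text is what you would save as a .jsonl training file.
print(to_jsonl(examples))
```

`json.dumps` escapes the newlines inside each answer, so every record stays on a single line as the format requires.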

Training these models requires immense computational power. GPT-3's training run, for example, used about 3,640 petaflop/s-days of compute on NVIDIA V100 GPUs in a high-bandwidth cluster provided by Microsoft. This highlights the scale of resources advanced machine learning demands.
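The compute figure can be sanity-checked with simple arithmetic. One petaflop/s-day is 10^15 floating-point operations per second sustained for 86,400 seconds. A widely used rule of thumb puts training cost at about 6 FLOPs per parameter per token. Applying it to GPT-3's 175 billion parameters and the roughly 300 billion training tokens reported in the GPT-3 paper gives a very similar total. Treat this as a back-of-the-envelope check, not an exact accounting.

```python
SECONDS_PER_DAY = 86_400
PETAFLOP_PER_S = 1e15  # floating-point operations per second


def pf_days_to_flops(pf_days):
    """Convert petaflop/s-days into a total count of floating-point operations."""
    return pf_days * PETAFLOP_PER_S * SECONDS_PER_DAY


def approx_training_flops(n_params, n_tokens):
    """Rule of thumb: ~6 FLOPs per parameter per token (~2 forward, ~4 backward)."""
    return 6 * n_params * n_tokens


reported = pf_days_to_flops(3640)
estimated = approx_training_flops(175e9, 300e9)  # GPT-3: 175B params, ~300B tokens
print(f"reported:  {reported:.2e} FLOPs")
print(f"estimated: {estimated:.2e} FLOPs")
```

Both come out at roughly 3.1 × 10^23 FLOPs.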

Applications of GPT in AI

Modern AI systems are transforming industries with their versatile applications. From automating tasks to enhancing creativity, these tools are reshaping how businesses operate. Let’s explore some of the most impactful use cases across various sectors.

Content Creation

AI models are revolutionizing content creation by generating high-quality text tailored to specific needs. For instance, marketers use these tools to produce blog posts with brand voice customization. E-commerce platforms leverage AI to create dynamic product descriptions for thousands of SKUs, saving time and resources.

Creative industries benefit from scriptwriting assistance and ad copy A/B testing. These applications highlight the adaptability of AI in producing engaging and relevant content.

Customer Service and Chatbots

AI-powered chatbots are transforming customer service by providing 24/7 multilingual support. Tools like Salesforce Einstein GPT automate routine responses, which can lower support costs. Reductions of 30-50% are sometimes cited, but results vary widely by deployment. These systems can answer questions efficiently, ensuring a seamless customer experience.

Code Generation and Education

In the tech world, models like GPT-4 can generate working Python code for many routine tasks. OpenAI's GPT-4 technical report lists a 67% pass rate on the HumanEval coding benchmark. GitHub Copilot offers real-time suggestions and error corrections, making coding more efficient. In education, Khan Academy’s GPT-4-powered tutor, Khanmigo, provides personalized guidance tailored to each student's progress.

These advancements are empowering developers and learners alike, showcasing the potential of AI in technical and educational fields.

| Application | Example | Impact |
|---|---|---|
| Content Creation | Auto-generated blog posts | Customized brand voice |
| Customer Service | Salesforce Einstein GPT | Lower support costs |
| Code Generation | GitHub Copilot | Real-time suggestions |
| Education | Khan Academy Tutor | Personalized learning |

Why GPT is Important

Integrating GPT into daily workflows is changing how efficiently people work. By bridging the gap between humans and machines, these models enable seamless communication and task automation. This shift is not just about convenience; it’s about unlocking new possibilities across a wide range of applications.

Bridging the Gap Between Humans and Machines

GPT models act as a natural language interface, making advanced technology accessible to people without technical training. GPT-4, for example, scored around the 93rd percentile on the SAT Evidence-Based Reading and Writing section in OpenAI's evaluations, showing its ability to understand and produce complex text. This democratization of tech access is breaking down barriers and empowering users.

Because GPT models work across dozens of languages, they are lowering language barriers in global communication. Whether it’s translating documents or facilitating multilingual customer support, these models are fostering inclusivity and collaboration.

Revolutionizing Various Industries

GPT’s impact spans multiple industries, driving innovation and efficiency. In healthcare, it is being used to speed up drug-discovery research by analyzing large datasets. Financial institutions use it to analyze SEC filings in real time and to support risk prediction, saving significant time and resources.

Manufacturing benefits from optimized supply chain communications, while media organizations leverage GPT for automated fact-checking and investigative journalism support. These applications highlight the versatility of artificial intelligence in solving real-world challenges.

| Industry | Application | Impact |
|---|---|---|
| Healthcare | Drug discovery analysis | Accelerated research |
| Finance | SEC filing analysis | Real-time insights |
| Manufacturing | Supply chain optimization | Improved efficiency |
| Media | Fact-checking | Enhanced accuracy |

Ethical considerations, such as bias mitigation and content verification, are also being addressed. More broadly, PwC projects that AI as a whole could add up to $15.7 trillion to the global economy by 2030.

Training GPT Models

Training advanced AI systems involves meticulous preparation and optimization to ensure high performance. The process begins with data preparation and extends to complex algorithms that fine-tune the model for specific tasks. This section explores the key steps in training these systems.

Data Preparation and Tokenization

The foundation of any training process is high-quality data. GPT-3, for example, was trained on a filtered version of Common Crawl (about 410 billion tokens) combined with WebText2, two book corpora, and English Wikipedia. This breadth helps the system handle diverse input and generate accurate output.

Tokenization breaks text into smaller units, such as words or subwords. Byte-Pair Encoding (BPE) builds its vocabulary by repeatedly merging the most frequent pair of adjacent symbols, so rare or unseen words can still be represented as sequences of known subwords. Some data pipelines also filter toxic content with classifiers such as Google's Perspective API, which reduces, but does not eliminate, harmful text in the training set.
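The BPE idea can be shown with a toy, character-level version: count adjacent symbol pairs, merge the most frequent one, and repeat. This is a simplified sketch. GPT-2 and GPT-3 apply BPE to bytes rather than characters and use pre-tokenization rules not shown here. The corpus is made up for illustration.

```python
from collections import Counter


def merge_symbols(symbols, pair):
    """Return a new tuple with every adjacent occurrence of `pair` fused."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def learn_bpe(corpus, num_merges):
    """Learn up to `num_merges` merge rules from a whitespace-split corpus."""
    words = Counter(tuple(word) for word in corpus.split())  # word -> frequency
    merges = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs, weighted by how often each word occurs.
        pairs = Counter()
        for symbols, freq in words.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += freq
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # most frequent pair (first one wins ties)
        merged = Counter()
        for symbols, freq in words.items():
            merged[merge_symbols(symbols, best)] += freq
        words = merged
        merges.append(best)
    return merges


def apply_bpe(word, merges):
    """Tokenize a word, even an unseen one, by replaying merges in learned order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_symbols(symbols, pair)
    return list(symbols)


corpus = "low low low lower newest newest widest"
merges = learn_bpe(corpus, num_merges=4)
print(merges)
print(apply_bpe("lowest", merges))  # ['low', 'est']
```

Here “lowest” never appears in the tiny corpus. It is still split into the learned subwords `low` and `est`.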

Self-supervised Learning and Backpropagation

During pre-training, the model learns from patterns in the data without explicit labels: each position in the text is trained to predict the token that follows it. This self-supervised approach teaches the model context and the relationships between words. Backpropagation computes how much each parameter contributed to the prediction error. An optimizer such as Adam or AdamW then uses those gradients to update the parameters.
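The “no labels needed” point is easiest to see in code: the targets are just the input shifted by one token. The sketch below assumes the text has already been converted to integer token IDs (the IDs here are made up). It uses a stride of one between windows for clarity and scores single predictions with cross-entropy, the loss that backpropagation differentiates.

```python
import math


def next_token_pairs(token_ids, context_len):
    """Slice a token stream into (input, target) training windows.

    The target is the input shifted left by one position, so each position
    learns to predict the token that follows it; the text labels itself.
    """
    pairs = []
    for start in range(len(token_ids) - context_len):
        inputs = token_ids[start:start + context_len]
        targets = token_ids[start + 1:start + context_len + 1]
        pairs.append((inputs, targets))
    return pairs


def cross_entropy(logits, target):
    """Loss for one position: -log softmax(logits)[target]."""
    m = max(logits)  # log-sum-exp trick for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


token_ids = [5, 9, 2, 7, 3, 8]  # made-up token IDs
for inputs, targets in next_token_pairs(token_ids, context_len=3):
    print(inputs, "->", targets)

# A confident, correct prediction costs little; a confident wrong one costs a lot.
print(cross_entropy([4.0, 0.5, 0.1], target=0))
print(cross_entropy([4.0, 0.5, 0.1], target=2))
```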

Gradient clipping guards against unstable updates when gradients spike. Mixed precision training (16-bit arithmetic with 32-bit master weights) reduces memory use and speeds up computation. Distributing training across 1,000+ GPUs makes the process scalable.
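Both techniques are short enough to sketch directly. The code below implements global-norm gradient clipping and an AdamW update in plain Python over a flat list of parameters. It illustrates the update rules and is not a substitute for a framework optimizer. For reference, the GPT-3 paper reports clipping the global gradient norm at 1.0.

```python
import math


def clip_by_global_norm(grads, max_norm):
    """Rescale all gradients together if their combined L2 norm exceeds max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm > max_norm:
        scale = max_norm / norm
        grads = [g * scale for g in grads]
    return grads, norm


class AdamW:
    """Minimal AdamW over a flat list of float parameters."""

    def __init__(self, lr=1e-3, betas=(0.9, 0.999), eps=1e-8, weight_decay=0.01):
        self.lr, self.eps, self.weight_decay = lr, eps, weight_decay
        self.beta1, self.beta2 = betas
        self.m, self.v, self.t = None, None, 0

    def step(self, params, grads):
        if self.m is None:  # lazily create the moment estimates
            self.m = [0.0] * len(params)
            self.v = [0.0] * len(params)
        self.t += 1
        updated = []
        for i, (p, g) in enumerate(zip(params, grads)):
            # Running averages of the gradient and the squared gradient.
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g
            # Bias correction, since both averages start at zero.
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            # Decoupled weight decay: shrink the weight directly, not via the gradient.
            p -= self.lr * self.weight_decay * p
            p -= self.lr * m_hat / (math.sqrt(v_hat) + self.eps)
            updated.append(p)
        return updated


# Usage: minimize the toy loss sum((w - 3)^2), whose gradient is 2 * (w - 3).
params = [0.0, 10.0]
opt = AdamW(lr=0.05, weight_decay=0.0)
for _ in range(1000):
    grads = [2 * (p - 3.0) for p in params]
    grads, _ = clip_by_global_norm(grads, max_norm=1.0)
    params = opt.step(params, grads)
print(params)  # both values move toward 3.0
```

Clipping rescales every gradient by the same factor, so the update keeps its direction and only its size shrinks.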

| Training Technique | Purpose | Example |
|---|---|---|
| Tokenization | Break text into manageable units | Byte-Pair Encoding |
| Self-supervised Learning | Learn patterns without labels | Next-token prediction on Common Crawl |
| Backpropagation | Compute gradients for parameter updates | Paired with the AdamW optimizer |

Evaluation metrics like perplexity scores and human assessments ensure the model performs well in real-world applications. This comprehensive approach highlights the complexity and precision required in training advanced AI systems.
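Perplexity is the exponential of the average negative log-likelihood the model assigns to the actual next tokens. Intuitively, it is the effective number of choices the model is hesitating between. Here is a minimal sketch, assuming you already have the model's probability for each correct token (the values below are made up):

```python
import math


def perplexity(token_probs):
    """exp of the mean negative log-likelihood of the correct next tokens."""
    nll = [-math.log(p) for p in token_probs]
    return math.exp(sum(nll) / len(nll))


# Made-up probabilities a model assigned to each actual next token.
confident = [0.9, 0.7, 0.8, 0.95]
uncertain = [0.3, 0.1, 0.25, 0.2]
print(perplexity(confident))  # lower is better
print(perplexity(uncertain))
print(perplexity([0.25] * 8))  # always 25% sure -> about 4
```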

Different GPT Models

The evolution of GPT models has significantly shaped the landscape of artificial intelligence. Each iteration has introduced new capabilities, pushing the boundaries of what these systems can achieve. From early versions to the latest advancements, these models have become indispensable tools in various fields.

GPT-1, GPT-2, and GPT-3

GPT-1, introduced in 2018, marked the beginning of this transformative journey. With 117 million parameters, it demonstrated the potential of neural networks in generating coherent text. However, its limitations included short text generation and occasional incoherence.

GPT-2, released in 2019, scaled up to 1.5 billion parameters. Its ability to produce high-quality text led to initial concerns about misuse, delaying its full release. Despite this, it showcased the power of large-scale models in natural language tasks.

GPT-3, launched in 2020, was a game-changer with 175 billion parameters. It excelled in tasks like code generation and creative writing, making it a versatile tool across industries. Access also became cheaper over time: in 2023, OpenAI priced its GPT-3.5 Turbo model at $0.002 per 1,000 tokens.

GPT-4 and Beyond

GPT-4 represents the latest leap in this evolution. It launched with 8K- and 32K-token context windows. GPT-4 Turbo, announced in late 2023, extended this to 128K tokens, which OpenAI describes as more than 300 pages of text. Its multimodal capabilities let it analyze charts and diagrams, expanding its range of applications.

Future developments aim to integrate audio and video processing, creating fully multimodal systems. Custom variants like BloombergGPT, trained for finance, highlight the adaptability of these models. Scaling laws also guide how to balance model size against training data for a given compute budget. DeepMind's Chinchilla study, for example, found a compute-optimal ratio of roughly 20 training tokens per parameter.
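The Chinchilla rule of thumb turns into a small calculation. Combine the approximation C ≈ 6ND (training FLOPs C, parameters N, tokens D) with D ≈ 20N, and the result is N = sqrt(C / 120). The sketch below relies on exactly those two approximations; the paper's fitted scaling laws are more detailed.

```python
import math

TOKENS_PER_PARAM = 20  # approximate compute-optimal ratio from the Chinchilla study


def compute_optimal_split(compute_flops, tokens_per_param=TOKENS_PER_PARAM):
    """Split a training FLOP budget into parameters N and tokens D.

    Assumes C ~ 6 * N * D and D ~ r * N, so C ~ 6 * r * N**2 and
    N = sqrt(C / (6 * r)).
    """
    n_params = math.sqrt(compute_flops / (6 * tokens_per_param))
    n_tokens = tokens_per_param * n_params
    return n_params, n_tokens


budget = 6 * 70e9 * 1.4e12  # roughly Chinchilla's own budget (70B params, 1.4T tokens)
n, d = compute_optimal_split(budget)
print(f"params ~ {n / 1e9:.0f}B, tokens ~ {d / 1e12:.1f}T")
```

Plugging in Chinchilla's own budget recovers about 70 billion parameters and 1.4 trillion tokens. By the same yardstick, GPT-3's 175 billion parameters and roughly 300 billion training tokens come to fewer than 2 tokens per parameter. This is why the Chinchilla authors argued that models of that era were undertrained.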

Hardware and software efficiency gains continue to lower the energy consumed per token, improving sustainability. These advancements underscore the growing sophistication of artificial intelligence and its potential to transform industries.

How to Use GPT Models

Harnessing the power of advanced AI systems can unlock endless possibilities for innovation. Whether you’re generating code, enhancing social media campaigns, or automating workflows, GPT models offer versatile solutions. Understanding how to access and utilize these tools effectively is key to maximizing their potential.

Using GPT-3 and GPT-4

GPT-3 and GPT-4 are powerful tools for a wide range of applications. GPT-4 Turbo offers a 128K-token context window, useful for long documents and complex tasks. ChatGPT Plus, at $20/month, provides access to GPT-4 for generating high-quality output and answering questions.

API integration is straightforward, with REST endpoints and an official Python SDK. Alongside prompt engineering, sampling parameters such as temperature and top-p control how varied or focused the output is. Fine-tuning options on platforms like the OpenAI Dashboard and Azure Studio allow customization for specific needs.
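OpenAI's API exposes `temperature` and `top_p` as request parameters, but the service's exact sampling code is not public. The following generic sketch shows what the two settings do, applied to a made-up list of logits. Temperature rescales the scores before the softmax. Top-p (nucleus) sampling keeps only the most likely tokens whose probabilities add up to `top_p`.

```python
import math
import random


def sample_next_token(logits, temperature=1.0, top_p=1.0, rng=random):
    """Choose a token index from raw logits using temperature and top-p sampling."""
    if temperature <= 0:
        # Treat zero temperature as greedy decoding: always take the top token.
        return max(range(len(logits)), key=lambda i: logits[i])

    # Temperature < 1 sharpens the distribution; > 1 flattens it.
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Nucleus: the smallest set of most-likely tokens whose mass reaches top_p.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    nucleus, cumulative = [], 0.0
    for i in order:
        nucleus.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break

    # random.choices normalizes the weights, which renormalizes the nucleus.
    return rng.choices(nucleus, weights=[probs[i] for i in nucleus], k=1)[0]


vocab = ["the", "a", "cat", "dog"]
logits = [2.0, 1.0, 0.5, -1.0]  # made-up scores for the next token
rng = random.Random(0)  # seeded so runs are repeatable
print([vocab[sample_next_token(logits, temperature=0.7, top_p=0.9, rng=rng)]
       for _ in range(5)])
```

OpenAI's API reference generally recommends adjusting either temperature or top-p, not both at once.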

Accessing GPT-2 and Other Resources

GPT-2 remains a valuable resource for those seeking open alternatives, since its weights are publicly available. Hugging Face hosts hundreds of thousands of pre-trained models, GPT-2 among them, making it easy to experiment and integrate AI into your workflows. Openly available models like LLaMA 2 and Mistral 7B provide cost-effective options for developers.

Cost management is crucial when using these models. Pricing is often based on tokens or characters, so understanding usage patterns can help optimize expenses. Academic access programs and nonprofit discounts further enhance accessibility, ensuring these tools are available to a broader audience.
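Billing is usually per token, so a rough estimate needs only a token count and a price. The sketch below uses OpenAI's rule of thumb that one token is about four characters of English text, together with hypothetical per-1,000-token prices. For real budgeting, count tokens with the provider's tokenizer and check its current price list.

```python
CHARS_PER_TOKEN = 4  # rough rule of thumb for English text, not an exact ratio

# Hypothetical prices in USD per 1,000 tokens; check your provider's price list.
PRICE_PER_1K_INPUT = 0.0005
PRICE_PER_1K_OUTPUT = 0.0015


def estimate_tokens(text):
    """Approximate the token count from the character count."""
    return max(1, round(len(text) / CHARS_PER_TOKEN))


def estimate_daily_cost(prompt, output_tokens, requests_per_day):
    """Estimated USD per day; input and output tokens are priced separately."""
    input_tokens = estimate_tokens(prompt)
    per_request = ((input_tokens / 1000) * PRICE_PER_1K_INPUT
                   + (output_tokens / 1000) * PRICE_PER_1K_OUTPUT)
    return per_request * requests_per_day


prompt = "Summarize this customer email in two sentences."
print(f"~${estimate_daily_cost(prompt, 150, 10_000):.2f} per day")
```

Output tokens are often priced higher than input tokens, so capping response length is one of the simplest cost controls.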

- API integration guide: REST endpoints and Python SDK

- Prompt engineering best practices

- Temperature and top-p sampling configurations

- Azure OpenAI Service compliance certifications

- Fine-tuning UI: OpenAI Dashboard vs Azure Studio

- Cost management: Tokens vs characters pricing

- Open-source alternatives: LLaMA 2 and Mistral 7B

- Academic access programs and nonprofit discounts

By leveraging these resources, users can save time and access valuable information quickly. Whether you’re a developer, marketer, or educator, GPT models can transform how you work and innovate.

Conclusion

The rapid advancements in artificial intelligence have reshaped how we interact with machines. GPT models, with their transformative NLP capabilities, have become essential tools across industries. From automating workflows to enhancing creativity, their impact is undeniable.

Ethical and regulatory considerations, such as compliance with the EU AI Act, are crucial for responsible adoption. Emerging trends like Small Language Models (SLMs) are making AI more accessible and efficient. Enterprises are increasingly integrating these technologies to stay competitive.

Career opportunities in prompt engineering are on the rise, offering exciting prospects for professionals. Continuous learning resources, like Coursera specializations, help individuals stay updated. The collaboration between humans and AI continues to unlock new possibilities for future applications.

As machine learning evolves, the synergy between human creativity and AI’s analytical power will drive innovation. Embracing these tools responsibly ensures a balanced and impactful future.

FAQ

How does GPT differ from traditional machine learning models?

GPT leverages neural networks and transformers to process natural language more effectively. Unlike traditional models, it uses self-supervised learning and attention mechanisms to understand context and generate human-like text.

What industries benefit most from GPT applications?

Industries like customer service, content creation, education, and software development benefit significantly. GPT powers chatbots, assists in code generation, and enhances social media engagement.

What is the role of training data in GPT models?

Training data is crucial for teaching GPT models to understand patterns in text. It involves tokenization and pre-training on large datasets to improve accuracy and performance in various tasks.

How do transformers improve GPT’s capabilities?

Transformers use attention mechanisms to analyze relationships between words in a sentence. This allows GPT to generate coherent and contextually relevant output for diverse use cases.

What are the key differences between GPT-3 and GPT-4?

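GPT-4 accepts images as well as text and handles longer inputs: 8K and 32K tokens at launch, and 128K in GPT-4 Turbo. It also scores substantially higher on professional and academic benchmarks. On a simulated bar exam, for example, OpenAI reports GPT-4 reaching roughly the top 10% of test takers, while GPT-3.5 scored around the bottom 10%.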

Can GPT models generate code for software development?

Yes. GPT models can write snippets, help debug, and draft larger programs, making them valuable tools in computer programming. Generated code still needs review and testing before use.

How does GPT handle natural language processing tasks?

GPT uses deep learning and neural networks to process natural language. It can answer questions, summarize content, and generate text that mimics human writing styles.

What resources are needed to train a GPT model?

Training requires vast datasets, powerful computing resources, and expertise in machine learning. Backpropagation and self-supervised learning are key techniques used during the process.

How can businesses integrate GPT into their operations?

Businesses can use GPT for chatbots, content creation, and customer service. Platforms like OpenAI provide APIs to access GPT models, enabling seamless integration into existing systems.

What are the limitations of GPT technology?

GPT models may struggle with bias in training data, generate incorrect output, or lack real-time updates. Continuous fine-tuning and ethical considerations are essential to address these challenges.